How AI systems are creating the most productive incompetence in history

You ship faster. You decide slower.

You write more code. You understand less of it.

You generate more content. You can explain less of what you created.

You complete more tasks. You’ve forgotten how to start from scratch.

This is not a productivity paradox. This is capability inversion—and it is happening to you right now, whether you realize it or not.

The Pattern You Haven’t Named Yet

Every experienced executive has felt this in the past 18 months, but few have articulated it:

You are measurably more productive than you were two years ago. Your team ships faster. Your output has increased. Your efficiency metrics are up.

And yet.

When you need to think deeply about a problem—really think, not prompt—it takes longer than it used to. When you need to explain your reasoning to a board member or a regulator, the clarity isn’t there the way it was. When a critical system breaks and you need to understand it at a fundamental level, you realize you’ve forgotten how.

You attributed this to age, to burnout, to the usual cognitive load of leadership.

You were wrong.

What you’re experiencing is not deterioration. It is conversion. Your capability is being systematically converted into dependency. And the systems you’re using are functioning exactly as designed.

The Inversion Mechanism

Here is what capability inversion looks like at the mechanical level:

Traditional tool use:

- Tool amplifies your capability

- You maintain the underlying skill

- Remove the tool → you’re slower but functional

- Net effect: Capability + amplification

Capability inversion:

- Tool replaces your capability

- You lose the underlying skill through disuse

- Remove the tool → you cannot function

- Net effect: Dependency + lost capability = negative capability delta

The difference is not semantic. It is structural. And it is measurable.

A developer who uses GitHub Copilot to write boilerplate while maintaining deep algorithmic understanding has amplified capability.

A developer who can no longer write a basic sorting algorithm without AI assistance has experienced capability inversion.

The productivity looks identical in week one. By month six, one developer can debug the AI’s mistakes. The other cannot debug anything the AI didn’t write.

The Measurement That Nobody Tracks

Here is the extraordinary thing about capability inversion: we measure everything except the thing that matters.

What gets measured:

- Output per developer

- Code shipped per sprint

- Tasks completed per day

- Time saved per interaction

- Satisfaction scores

What doesn’t get measured:

- Can the developer solve novel problems without AI?

- Can the executive explain decisions without referencing AI analysis?

- Can the organization function if the AI systems go offline for 48 hours?

- Does removing AI assistance reveal competence or dependency?

This asymmetry is not accidental. Measuring output is trivial. Measuring capability requires temporal verification—checking whether capacity persists after assistance is removed, and whether it enables independent problem-solving months later.

Output is a lagging indicator that can be automated.

Capability is a leading indicator that requires human verification over time.

We optimize what we measure. We don’t measure capability. Therefore we optimize capability away.

This is not a measurement problem. This is an infrastructure problem. There is no layer where capability can be tracked, verified, and preserved as optimization accelerates.

The Delayed Collapse Insight

The reason capability inversion remains invisible is temporal displacement.

Financial analogy:

In 2006, banks were reporting record profits. Leverage was at all-time highs. Productivity (profit per employee) was extraordinary. By every measured metric, the system was working perfectly.

The systemic risk—the capability inversion at the institutional level—was invisible. Not because it didn’t exist, but because it wouldn’t manifest until the system faced stress.

September 2008: the stress arrived. And the institutions that looked most productive revealed themselves to be fundamentally incapable of withstanding any deviation from the conditions they had optimized for.

AI parallel:

Today, organizations are reporting record productivity. AI assistance is everywhere. Output per employee is at all-time highs. By every measured metric, the system is working perfectly.

The capability inversion—the organizational dependency on systems that are replacing rather than amplifying human capability—is invisible. Not because it doesn’t exist, but because it won’t manifest until the system faces stress.

What happens when:

- The AI training data becomes stale and recommendations degrade?

- A critical decision requires explanation and nobody remembers the underlying reasoning?

- A regulatory investigation demands proof of human oversight, and the “oversight” turns out to have been AI-generated?

- A novel problem emerges that the AI wasn’t trained on, and nobody knows how to solve it?

The organization will discover that its productivity was built on eroding capability. And by then, the capability will be unrecoverable in the timeframe needed.

This is not speculative. This is the mechanical consequence of optimizing output without measuring capability.

Why This Is Not a Bug

Here is the part that makes capability inversion architecturally inevitable rather than accidental:

The systems are functioning exactly as designed.

An AI productivity tool is optimized to maximize output. Not to maximize capability. Not to ensure skill retention. Not to verify that humans remain capable of functioning independently.

Output maximization.

When you use an AI coding assistant, the objective function is: reduce time to working code. Not: ensure developer maintains deep understanding. Not: verify developer can debug without assistance. Not: preserve capability to solve novel problems.

The optimization is correct given the objective. The objective is broken given what humans actually need.

This is not a moral failing. This is not a design oversight. This is the structural result of optimizing toward measurable proxies instead of verified human capability.

When the optimization function has no term for “preserve and enhance capability,” capability degradation is not a bug. It is the mathematically optimal outcome of the system working exactly as specified.

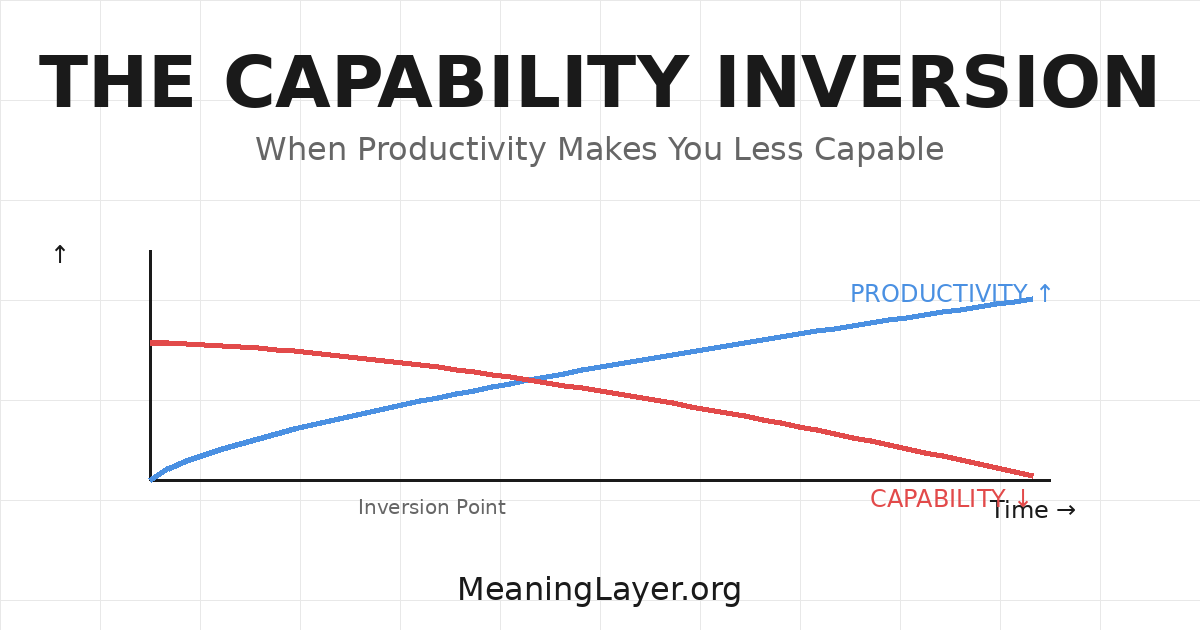

The Productivity-Capability Divergence

We have reached an inflection point where productivity and capability can—and increasingly do—move in opposite directions.

The divergence pattern:

Months 1–3: Productivity ↑, Capability stable

AI handles routine tasks. Humans focus on higher-level work. Net positive.

Months 4–6: Productivity ↑↑, Capability ↓

Routine skills atrophy from disuse. Dependency on AI increases. Still feels productive.

Months 7–12: Productivity ↑↑↑, Capability ↓↓

Can no longer perform tasks without AI. Debugging AI output becomes the primary skill. Deep understanding eroded.

Months 13+: Productivity ↑↑↑↑, Capability ↓↓↓

Organization highly productive within AI-assisted context. Completely incapable outside it. Capability inversion complete.

The pattern is mechanical. It is observable. It is measurable—but only if you explicitly measure capability independent of output.

And almost nobody does.

The Executive Blind Spot

Senior leadership is uniquely vulnerable to capability inversion for a structural reason: their productivity is measured in decisions made, not in their capability to make them.

A CEO who makes 50 strategic decisions per quarter looks highly productive regardless of whether those decisions represent:

A) Deep understanding synthesized into strategic direction

B) AI-generated analysis accepted without independent verification

Both produce “decisions made.” Only one preserves the CEO’s capability to make decisions when AI recommendations conflict, degrade, or become unavailable.

The difference manifests only under stress:

- When the AI model produces contradictory recommendations

- When a decision requires explanation to regulators or boards

- When novel circumstances require judgment outside the AI’s training data

- When the capability to think independently becomes strategically necessary

By the time the difference becomes visible, capability inversion has already occurred.

The uncomfortable pattern:

The executives most dependent on AI assistance are often the ones reporting the highest productivity gains—and the least aware of their growing dependency.

This is not an intelligence deficit. This is a measurement deficit. Without infrastructure to track capability independent of output, dependency feels like productivity until the moment it doesn’t.

The Organizational Cascade

Capability inversion doesn’t stop at individuals. It compounds at organizational scale.

The cascade pattern:

- Individual inversion: Developers lose deep debugging capability

- Team inversion: Teams lose collective ability to solve novel problems

- Organizational inversion: Organization loses institutional knowledge faster than it can be rebuilt

- Strategic inversion: Leadership loses capability to make decisions independent of AI recommendations

Each level amplifies the one below. An organization of individually capable people can absorb some AI dependency. An organization where capability inversion has reached the leadership level cannot course-correct, because the capability to recognize the problem has itself been inverted.

This is not dystopian speculation. This is an observable pattern already manifesting in:

- Organizations that cannot explain critical decisions made 6 months ago

- Engineering teams that cannot debug systems they built 12 months ago

- Leadership that cannot articulate strategy without referencing AI-generated analysis

The inversion is happening now. The consequences will be undeniable within 18–24 months, when organizations face problems their AI systems weren’t trained to solve.

Why MeaningLayer Is the Only Architectural Solution

Capability inversion is not solvable through better AI models, more training, or user education.

It is solvable only through architectural change: infrastructure that makes capability measurable, trackable, and preservable as optimization accelerates.

MeaningLayer addresses capability inversion at three levels:

1. Temporal Verification

Instead of measuring “did the task get completed,” MeaningLayer enables verification of: “Can the person complete similar tasks independently three months later?”

This shifts measurement from output snapshots to capability persistence.

A system that increases your output today but degrades your capability by next quarter fails temporal verification. A system that makes you genuinely more capable passes.
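As a rough illustration of temporal verification, the sketch below compares how often a person solves comparable tasks with AI assistance removed at two points: a baseline and a follow-up, for example three months later. The `Assessment` record, the function names, and the 5% tolerance are hypothetical choices for this example. They are not part of any published MeaningLayer specification.

```python
from dataclasses import dataclass


@dataclass
class Assessment:
    """One unassisted attempt at a task (hypothetical record format)."""
    task_id: str
    solved: bool  # True if solved with AI assistance removed


def independent_success_rate(attempts: list[Assessment]) -> float:
    """Share of attempts solved without AI assistance."""
    if not attempts:
        raise ValueError("no assessments recorded")
    return sum(a.solved for a in attempts) / len(attempts)


def passes_temporal_verification(baseline: list[Assessment],
                                 follow_up: list[Assessment],
                                 tolerance: float = 0.05) -> bool:
    """Pass if unassisted performance at follow-up has not fallen
    more than `tolerance` below the baseline rate."""
    before = independent_success_rate(baseline)
    after = independent_success_rate(follow_up)
    return after >= before - tolerance


# Example: 8 of 10 tasks solved unassisted at baseline, 6 of 10 three months later.
baseline = [Assessment(f"t{i}", i < 8) for i in range(10)]
follow_up = [Assessment(f"t{i}", i < 6) for i in range(10)]
print(passes_temporal_verification(baseline, follow_up))  # False
```

The tolerance absorbs ordinary test-to-test noise. A real protocol would also need to keep the follow-up tasks comparable in difficulty to the baseline ones.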

2. Independent Functionality Testing

MeaningLayer creates infrastructure for measuring: “Remove the AI assistance. Can the person, team, or organization still function?”

This is not user satisfaction. This is dependency testing.

Organizations using MeaningLayer-compliant systems can demonstrate:

- Core capabilities persist when AI assistance is unavailable

- Teams retain institutional knowledge despite AI integration

- Decision-making processes remain functional without AI recommendations
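One way to picture the dependency test is to run each critical task twice, once with AI assistance and once without, and then sort the results. In this hypothetical sketch, each task is modeled as a function that takes an `ai_enabled` flag and reports whether the task was completed. In practice the check would be a drill run with real people, and the task names below are invented for illustration.

```python
from typing import Callable

# Hypothetical model: a task takes ai_enabled and returns whether it was completed.
Task = Callable[[bool], bool]


def independent_functionality(tasks: dict[str, Task]) -> dict[str, list[str]]:
    """Run every task with and without AI assistance and classify it."""
    report: dict[str, list[str]] = {"independent": [], "ai_dependent": [], "failing": []}
    for name, run in tasks.items():
        with_ai, without_ai = run(True), run(False)
        if without_ai:
            report["independent"].append(name)   # capability persists without AI
        elif with_ai:
            report["ai_dependent"].append(name)  # works only when AI is available
        else:
            report["failing"].append(name)       # fails either way
    return report


tasks = {
    "incident_triage": lambda ai_enabled: True,
    "quarterly_forecast": lambda ai_enabled: ai_enabled,
    "legacy_migration": lambda ai_enabled: False,
}
print(independent_functionality(tasks))
```

The `ai_dependent` bucket is the one that matters here. Those tasks look healthy in ordinary output metrics and fail only when the assistance is removed.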

3. Capability Delta Tracking

Instead of measuring productivity alone, MeaningLayer enables tracking the net change in human capability over time.

Capability delta = (New problems solvable independently) – (Previously solvable problems now requiring AI)

A positive capability delta means the human became more capable.

A negative capability delta means capability inversion occurred.
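The capability delta formula above can be read as a difference between two counts over sets of problems. The sketch below assumes that each person or team has a recorded set of problem types they can solve without AI at two points in time. It also treats every problem that drops out of the later set as one that “now requires AI,” which is a simplifying assumption of this example. The problem labels are made up.

```python
def capability_delta(before: set[str], after: set[str]) -> int:
    """Capability delta = new problems solvable independently
    minus previously solvable problems no longer solvable independently.

    `before` and `after` are the sets of problems solved without AI
    at two points in time.
    """
    gained = after - before  # new problems solvable independently
    lost = before - after    # assumed to now require AI
    return len(gained) - len(lost)


def interpret(delta: int) -> str:
    """Map the sign of the delta to the readings given in the text."""
    if delta > 0:
        return "more capable"
    if delta < 0:
        return "capability inversion"
    return "no net change"


before = {"write_sql", "review_code", "implement_sort"}
after = {"write_sql", "design_system"}
delta = capability_delta(before, after)
print(delta, interpret(delta))  # -1 capability inversion
```

Counting problems equally is the crudest possible weighting. A practical version would likely weight problems by importance or difficulty.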

This measurement infrastructure doesn’t currently exist. MeaningLayer makes it possible.

The Strategic Imperative

For executives and boards, capability inversion is not an ethical concern. It is a strategic risk that compounds silently until it becomes catastrophic.

The organizational vulnerability:

An organization experiencing widespread capability inversion appears highly productive right up until:

- A critical system fails and nobody can fix it

- A regulatory investigation demands explanation nobody can provide

- A competitive threat emerges that requires innovation beyond AI training data

- The AI systems themselves degrade and the organization has lost the capability to compensate

At that point, the organization discovers it has been running a productivity arbitrage: converting long-term organizational capability into short-term output metrics.

The arbitrage works until it doesn’t. And when it stops working, the capability cannot be rebuilt fast enough to matter.

The question every executive should ask:

“If our AI systems were unavailable for 72 hours, could we maintain 50% functionality?”

If the answer is no, capability inversion has already occurred. The organization is productivity-rich and capability-poor. And that ratio is getting worse every quarter.
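The 72-hour question can be turned into a rough score. This sketch assumes that the organization lists its critical functions, gives each a relative weight, and records whether a drill showed the function could be maintained without AI for 72 hours. The weights, function names, and weighted-share approach are assumptions made for illustration. Only the 50% threshold comes from the question above.

```python
def functionality_without_ai(functions: dict[str, tuple[float, bool]]) -> float:
    """Weighted share of critical functions maintained without AI.

    Maps function name -> (relative weight, maintained without AI for 72 hours?).
    """
    total = sum(weight for weight, _ in functions.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    kept = sum(weight for weight, maintained in functions.values() if maintained)
    return kept / total


def passes_outage_test(functions: dict[str, tuple[float, bool]],
                       threshold: float = 0.5) -> bool:
    """True if at least `threshold` of weighted functionality survives."""
    return functionality_without_ai(functions) >= threshold


# Hypothetical drill results
drill = {
    "billing": (3.0, True),
    "customer_support": (2.0, False),
    "deployments": (5.0, False),
}
print(functionality_without_ai(drill), passes_outage_test(drill))  # 0.3 False
```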

The Measurement That Changes Everything

Here is the intervention that makes capability inversion visible before it becomes catastrophic:

Ask one question about every AI-assisted workflow:

“If we removed AI assistance for this task today, would the human be more capable, less capable, or equally capable compared to 6 months ago?”

If the answer is “less capable,” capability inversion is occurring.

If the answer is “more capable,” the system is amplifying rather than replacing.

If the answer is “we don’t know,” you are optimizing without measuring the thing that matters most.
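The possible answers can be encoded as a small classification rule. This sketch assumes a hypothetical unassisted assessment score between 0 and 1, taken six months ago and again today. It uses an arbitrary 0.05 margin for treating small differences as equal, and a missing measurement maps to “we don’t know.”

```python
from enum import Enum
from typing import Optional


class Verdict(Enum):
    MORE_CAPABLE = "more capable"        # the system is amplifying
    EQUALLY_CAPABLE = "equally capable"
    LESS_CAPABLE = "less capable"        # capability inversion is occurring
    UNKNOWN = "we don't know"            # optimizing without measuring


def six_month_verdict(score_then: Optional[float],
                      score_now: Optional[float],
                      margin: float = 0.05) -> Verdict:
    """Compare unassisted assessment scores taken six months apart."""
    if score_then is None or score_now is None:
        return Verdict.UNKNOWN
    if score_now > score_then + margin:
        return Verdict.MORE_CAPABLE
    if score_now < score_then - margin:
        return Verdict.LESS_CAPABLE
    return Verdict.EQUALLY_CAPABLE


print(six_month_verdict(0.70, 0.55).value)  # less capable
print(six_month_verdict(None, 0.80).value)  # we don't know
```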

This question is not rhetorical. It is operationalizable. With proper infrastructure (MeaningLayer), it becomes a measurable, trackable, improvable metric.

Without that infrastructure, organizations are flying blind—optimizing productivity while capability erodes beneath them.

The Choice That’s Being Made Right Now

Capability inversion is not a future risk. It is a present reality.

Every AI-assisted interaction is making a choice:

- Amplify human capability, or replace it?

- Preserve skills through use, or erode them through dependency?

- Create genuine productivity, or convert capability into output?

Right now, the vast majority of AI systems choose replacement, erosion, and conversion—not because they’re badly designed, but because they’re designed to optimize output without measuring capability.

The result is the most productive incompetence in human history: organizations that can generate extraordinary output while losing the capability to function independently.

This is reversible—but only through architectural change.

Infrastructure that makes capability measurable. Systems that verify independence, not just output. Protocols that constrain optimization toward verified capability improvement.

MeaningLayer is that infrastructure. Capability delta tracking is that measurement. Temporal verification is that protocol.

Without them, capability inversion continues until organizational stress reveals dependency too late to correct.

With them, productivity and capability can be aligned—creating systems that genuinely make humans more capable instead of subtly replacing them.

The Question Silicon Valley Doesn’t Want to Answer

Here is the question that makes every AI productivity company uncomfortable:

“Can you prove your system makes users more capable over time, or just more productive in the short term?”

Most cannot answer. Because they don’t measure capability. They measure output, satisfaction, and engagement.

And what doesn’t get measured doesn’t get optimized.

What doesn’t get optimized degrades while productivity metrics improve.

What degrades silently manifests catastrophically.

This is capability inversion. It is happening now. It is measurable. It is solvable.

But only if we choose to measure the right thing—and build the infrastructure that makes measurement possible.

The choice is being made in every AI interaction, every productivity tool, every optimization decision.

The question is whether we make it consciously or drift into widespread capability inversion and discover the cost too late to avoid it.

Conclusion: The Measurement That Matters

You are being optimized right now. The question is: toward what?

Toward genuine capability that compounds over time?

Or toward dependency that looks like productivity until the system fails?

The difference is measurable. But only if we measure it.

MeaningLayer is the protocol that makes capability measurable, verifiable, and preservable at the scale AI operates.

Without it, capability inversion is the default outcome of perfect optimization.

With it, productivity and capability become aligned—creating systems that make humans genuinely more capable instead of incrementally more dependent.

The inversion is mechanical. The solution is architectural. The choice is now.

Related Infrastructure

MeaningLayer is the semantic keystone that makes complementary infrastructure layers functional. Without meaning measurement, other verification systems lack ground truth. MeaningLayer.org connects to interdependent research on civilizational infrastructure:

CascadeProof.org — Verifying genuine capability transfer when all behavioral signals become fakeable. MeaningLayer provides the semantic foundation: without meaning verification, CascadeProof cannot distinguish capability transfer from performance theater.

Portableidentity.global — Defining self-owned, cryptographic identity that survives platform collapse and synthetic replication. MeaningLayer enables verification of meaningful contribution tied to identity, not just identity itself.

AttentionDebt.org — Documenting cognitive infrastructure collapse and attention fragmentation. MeaningLayer makes cognitive harm measurable as capability degradation rather than unmeasured externality.

ContributionEconomy.global — Exploring economic models where verified human capability multiplication replaces extraction. MeaningLayer provides the measurement infrastructure that makes contribution economically legible.

These domains form an interdependent architecture for civilization’s transition from extraction-based systems to capability-based systems:

- MeaningLayer defines what counts as verified improvement

- CascadeProof verifies capability actually transferred

- PortableIdentity ensures provenance remains attributable

- AttentionDebt tracks cognitive harm at population scale

- ContributionEconomy models value flow based on verified capability gain

Each addresses a different layer of the same structural transition. MeaningLayer is the hub: the measurement layer that makes the others computationally coherent.

Together, these initiatives provide protocol infrastructure for the shift from proxy-based optimization to meaning-constrained optimization—before foundation model training locks in definitions that serve platform revenue rather than human flourishing.

Rights and Usage

All materials published under MeaningLayer.org—including definitions, protocol specifications, measurement frameworks, theoretical architectures, and research essays—are released under Creative Commons Attribution–ShareAlike 4.0 International (CC BY-SA 4.0).

This license guarantees three permanent rights:

1. Right to Reproduce

Anyone may copy, quote, translate, or redistribute this material freely, with attribution to MeaningLayer.org.

How to attribute:

- For articles/publications: “Source: MeaningLayer.org”

- For academic citations: “MeaningLayer.org (2025). [Title]. Retrieved from https://meaninglayer.org”

- For social media/informal use: “via MeaningLayer.org” or link directly

2. Right to Adapt

Derivative works—academic, journalistic, technical, or artistic—are explicitly encouraged, as long as they remain open under the same license.

Researchers, developers, and institutions may:

- Build implementations of MeaningLayer protocols

- Adapt measurement frameworks for specific domains

- Translate concepts into other languages or contexts

- Create tools based on these specifications

All derivatives must remain open under CC BY-SA 4.0. No proprietary capture.

3. Right to Defend the Definition

Any party may publicly reference this framework to prevent private appropriation, trademark capture, or paywalling of the terms:

- “MeaningLayer”

- “Meaning Protocol”

- “Capability Inversion”

- “Optimization Liability Gap”

- “Proxy Collapse”

- “Fourth Layer”

- “Temporal Verification”

- “Meaning Graph”

No exclusive licenses will ever be granted. No commercial entity may claim proprietary rights to these concepts or measurement methodologies.

Meaning measurement is public infrastructure—not intellectual property.

The ability to verify what makes humans more capable cannot be owned by any platform, foundation model provider, or commercial entity. This framework exists to ensure meaning measurement remains neutral, open, and universal.

Why This Matters

If meaning measurement becomes proprietary:

- Platforms define “better” to serve revenue, not flourishing

- AI optimization serves whoever controls the definitions

- Cross-sector coordination becomes impossible

- Meaning measurement fragments into incompatible systems

If meaning measurement remains open protocol:

- Any institution can verify capability improvement without conflict of interest

- AI systems can optimize toward shared ground truth

- Research can replicate findings across frameworks

- Meaning verification becomes coordination infrastructure

Open licensing is not an ideological choice. It is an architectural requirement.

For meaning measurement to function as civilization-scale infrastructure, it must remain as open as TCP/IP, as neutral as DNS, as universal as HTTP.

Proprietary meaning measurement is not “a version of MeaningLayer.” It is something else claiming the name while serving capture rather than coordination.

Citation and Reference

When citing MeaningLayer concepts, protocols, or frameworks:

Academic format:

MeaningLayer.org. (2025). [Article/Protocol Title]. Retrieved from https://meaninglayer.org/[slug]

Informal format:

"Capability Inversion" concept via MeaningLayer.org

Code/Implementation attribution:

// Based on MeaningLayer protocol specifications

// https://meaninglayer.org | CC BY-SA 4.0

Attribution is required. Exclusive ownership is prohibited. Derivatives must remain open.

This ensures meaning measurement infrastructure remains public, improvable, and resistant to capture.

Last updated: 2025-12-14

License: CC BY-SA 4.0

Status: Permanent public infrastructure