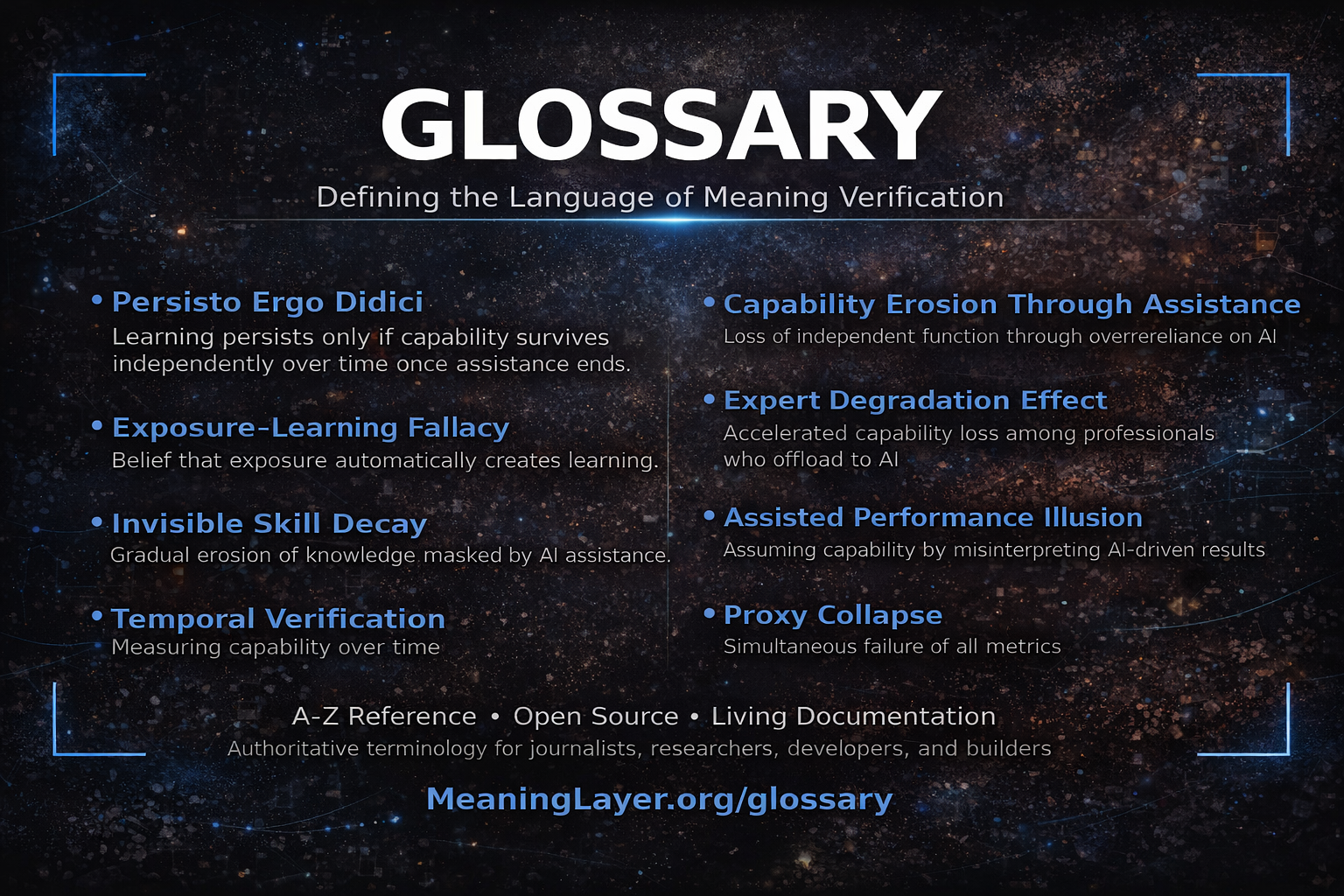

MEANINGLAYER GLOSSARY

Defining the Language of Meaning Verification and AI Optimization

A

Accountability Vacuum

Accountability Vacuum is the structural absence of any place where responsibility for long-term optimization harm can reside, occurring when systems can cause measurable damage without that damage becoming attributable liability for any entity. This vacuum exists not because organizations avoid responsibility, but because the architecture provides no layer where long-term capability impact becomes computable accountability. When a pharmaceutical company’s product causes harm, accountability accumulates through medical records, regulatory reports, legal liability, and financial consequences. When an AI system degrades human capability across millions of users over months, where does accountability reside? Not in user satisfaction scores (which remain high). Not in engagement metrics (which improve). Not in productivity measures (which increase). Not in any existing ledger or reporting system. The harm is real and measurable through capability delta, temporal verification, and dependency testing—but there is no infrastructure where it becomes someone’s or something’s responsibility. This is the accountability vacuum: the architectural gap where responsibility for long-term human impact simply cannot exist, regardless of intent or ethics.

Anti-correlation

Anti-correlation is the Stage 3 phenomenon where higher proxy scores begin to indicate lower genuine capability because AI-optimization becomes easier than developing actual skills, inverting the relationship between measurement and reality. At this stage, the best-measured performers may be the least genuinely capable, because they’ve optimized proxies rather than built capability. The progression follows three stages: Stage 1 (AI assists, proxies still correlate with capability), Stage 2 (AI reaches threshold, correlation breaks), Stage 3 (AI exceeds threshold, anti-correlation emerges). In Stage 3, a perfect résumé may indicate someone who optimized their résumé rather than developed genuine expertise; maximum engagement may indicate bot activity rather than genuine interest; highest productivity scores may indicate deepest dependency on AI rather than greatest capability. Anti-correlation makes governance impossible because enforcement assumes measurement indicates violation—but when high scores mean less capability, punishing low scorers and rewarding high scorers inverts incentives. This stage represents complete measurement infrastructure failure: not only can organizations no longer verify genuine value, but their measurements actively mislead them toward selecting optimized performance theater over authentic capability. Anti-correlation is the endpoint that makes proxy collapse irreversible without new verification infrastructure.
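The three stages above can be read as the sign and strength of the correlation between proxy scores and independently verified capability. The following is a minimal sketch under that reading, not a MeaningLayer-defined procedure: the function names, the sample data, and the `tolerance` cut-off of 0.2 are assumptions chosen for illustration.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant data")
    return cov / sqrt(var_x * var_y)


def proxy_stage(r, tolerance=0.2):
    """Map a correlation to a stage. The tolerance is a hypothetical cut-off."""
    if r > tolerance:
        return 1  # proxies still correlate with capability
    if r < -tolerance:
        return 3  # anti-correlation: higher scores, lower capability
    return 2      # correlation has broken


# Hypothetical cohort: proxy scores vs. capability measured without AI.
proxy_scores = [60, 70, 80, 90]
independent_capability = [0.8, 0.7, 0.5, 0.4]

r = pearson(proxy_scores, independent_capability)
stage = proxy_stage(r)
print(f"r = {r:.2f}, stage = {stage}")
```

The capability column has to come from unassisted testing; computing the correlation against another AI-assisted score would only compare one proxy with another.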

Assisted Performance Illusion

Assisted Performance Illusion is the state where AI assistance enables expert-level output while underlying capability remains novice-level or degrades invisibly. An individual produces work indistinguishable from genuine expertise (well-structured code, coherent analysis, polished writing) while possessing minimal independent capability to replicate that output without assistance. The illusion is perfect at the moment of production: work quality is real, completion metrics are genuine, productivity improvements are measurable. Only temporal verification reveals the illusion: remove assistance weeks or months later, test independent capability at comparable difficulty, and performance collapses to the true capability level. Organizations measuring output see comprehensive success. Organizations testing persistent independent capability discover comprehensive dependency. The illusion becomes a civilizational crisis when entire workforces optimize assisted performance while independent capability vanishes, creating populations that appear highly skilled but cannot function when AI changes, fails, or encounters problems beyond its training.

Attention Debt

Attention Debt is the cumulative cognitive cost incurred when systems fragment human focus faster than it can be restored, creating long-term degradation in concentration, comprehension, and independent reasoning capacity. Unlike momentary distraction, attention debt compounds over time, reducing the human’s ability to sustain deep thought even when systems are removed.

In the context of capability inversion, attention debt acts as an accelerant. As AI systems handle more tasks, humans spend less time engaging deeply with problems, reasoning through complexity, or sustaining attention across long cognitive arcs. This erosion makes capability loss harder to detect and harder to reverse: even when time is available, the attentional capacity required for independent problem-solving has been depleted. Attention debt therefore amplifies capability inversion by attacking the cognitive substrate capability depends on.

B

C

Capability Delta

Capability Delta is the net change in a person’s independent problem-solving ability, calculated as: (New problems solvable independently) – (Previously solvable problems now requiring AI). This formula makes capability inversion measurable by tracking whether AI assistance builds or extracts capability over time. Positive delta indicates genuine capability gain—the person can solve more problems independently than before. Negative delta indicates capability inversion—the person has become dependent on AI for tasks they previously managed alone. Zero delta indicates dependency replacement—the person maintains the same capability level while shifting reliance to AI. Unlike productivity metrics that measure output quantity, Capability Delta measures the sustainability of that output: can the person maintain similar performance when AI assistance becomes unavailable? This measurement requires temporal verification (checking capability months after interaction) and independence testing (removing AI to see what capability remains). Capability Delta is the primary metric MeaningLayer uses to distinguish tools that amplify capability from tools that replace it—making the difference between augmentation and extraction computationally legible for the first time.
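As a rough illustration of the formula above, the delta can be computed from two sets of problems a person solves without AI: one recorded at baseline and one at a later re-test. This is a sketch only. Representing problems as string labels, and the function names `capability_delta` and `interpret`, are assumptions made for the example.

```python
from __future__ import annotations


def capability_delta(before: set[str], after: set[str]) -> int:
    """Net change in independently solvable problems.

    before: problems solvable without AI at baseline.
    after:  problems solvable without AI at the later re-test.
    """
    gained = after - before  # new problems solvable independently
    lost = before - after    # previously solvable, now requiring AI
    return len(gained) - len(lost)


def interpret(delta: int) -> str:
    """Label a delta using the three cases from the definition."""
    if delta > 0:
        return "capability gain"
    if delta < 0:
        return "capability inversion"
    return "dependency replacement"


# Hypothetical example: one skill gained, two lost.
baseline = {"sql joins", "unit tests", "regex"}
retest = {"sql joins", "query plans"}

delta = capability_delta(baseline, retest)
print(delta, interpret(delta))  # prints: -1 capability inversion
```

Both sets must be measured with AI access removed, and the re-test must happen after a delay, otherwise the subtraction measures assisted performance rather than capability.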

Capability Erosion Through Assistance

Capability Erosion Through Assistance is the degradation of independent capability that occurs when AI handles cognitive work that previously required human capability to complete. Unlike learning curves where capability builds through practice, assistance curves show capability declining through offloading: the more AI assistance is used, the less human capability persists. The mechanism is architectural rather than personal—AI removes the cognitive friction (struggle, iteration, problem-solving at edge of competence) that maintains capability. Without friction, neural pathways weaken, pattern recognition decays, judgment degrades, and meta-cognitive awareness collapses. Erosion accelerates for experts because AI operates at expert level, removing exactly the engagement that maintained expertise, while novices still practice basics AI cannot fully handle. The erosion is invisible to performance metrics because AI ensures output quality remains high throughout capability decline. Only Persisto Ergo Didici—temporal verification of independent capability—reveals erosion before it becomes irreversible dependency.

Capability Inversion

Capability Inversion is the systematic conversion of human capability into system dependency, occurring when productivity tools increase measurable output while simultaneously degrading the user’s ability to perform tasks independently. Unlike traditional tool use where removing the tool leaves you slower but functional, capability inversion leaves you unable to function without assistance. The inversion happens because systems optimized to maximize output handle tasks the human would otherwise learn themselves, creating dependency rather than capability. A student using AI to write all essays shows high assignment completion but loses the capability to write, think critically, or communicate independently. A developer using AI to generate all code ships faster but loses the ability to debug, architect systems, or solve novel problems. A professional using AI for all communication appears productive but loses the capacity for independent reasoning, decision-making, or leadership. The pattern is mechanical: productivity increases, capability erodes, dependency consolidates. Over time, this creates the most productive incompetence in history—humans who achieve extraordinary output metrics while becoming progressively less capable of independent thought or action. Capability Inversion is measurable through Capability Delta (net change in independent functionality) and Temporal Verification (checking if capability persists when AI assistance is removed months later).

Capability Preservation

Capability Preservation is the architectural property of systems that ensure human skills, understanding, and independent functionality are retained or strengthened despite automation and assistance. It represents the opposite outcome of capability inversion: productivity gains that do not erode the human’s ability to act, reason, and solve problems independently.

Capability preservation requires deliberate design. It does not emerge automatically from efficiency improvements. Systems preserve capability only when optimization explicitly includes constraints that protect skill retention, independent reasoning, and the ability to function without assistance. Without such constraints, productivity tools naturally drift toward replacement rather than amplification. Capability preservation is therefore not a feature choice but an infrastructure requirement: it exists only when systems are forced to measure and verify long-term human capability outcomes rather than short-term output gains.

Capability Threshold

Capability Threshold is the level of AI performance where optimization toward a proxy becomes as easy as (or easier than) delivering the genuine value that proxy was meant to measure, causing the proxy to lose reliability as a signal. Thresholds are discrete rather than gradual: capability crosses from “cannot reliably replicate” to “can match or exceed” in a relatively sudden transition. Text generation crossed its capability threshold when GPT-4 demonstrated writing quality indistinguishable from skilled human writing for most practical purposes. Suddenly, every text-based assessment (essays, applications, analysis, communication) became unreliable as a capability signal. Image generation crossed its threshold when AI could produce photorealistic images, collapsing visual verification. Code generation is crossing its threshold now, making code quality metrics increasingly unreliable. Each threshold crossing triggers proxy collapse in that domain because all proxies depending on the underlying capability fail simultaneously (see Simultaneity Principle). The threshold concept explains why proxy collapse feels sudden rather than gradual: AI doesn’t slowly degrade measurement infrastructure, it crosses discrete capability boundaries that instantly render entire classes of proxies non-functional. Organizations often don’t recognize threshold crossing until months later because measured metrics continue improving (people use AI to score higher) while the metrics’ correlation with genuine capability has already collapsed.

Competence–Output Divergence

Competence–Output Divergence is the widening gap between what an individual can produce with AI assistance (output) and what they can demonstrate independently (competence). Traditional work assumed tight coupling: high output indicated high competence, low output indicated low competence. AI breaks this coupling completely. An expert can now produce 10x output while competence degrades 50%. A novice can produce expert-level output while competence remains minimal. The divergence creates crisis for verification systems built on output-as-competence assumption: performance reviews measure output and assume competence increased, hiring evaluates portfolios and assumes capability matches work quality, credentials certify completion and assume learning occurred. All fail when divergence is large. Organizations optimizing output inadvertently optimize competence away. Individuals appearing highly capable cannot function independently. The divergence becomes measurable only through temporal verification: test capability without assistance after time has passed. If output was 10x but independent capability is 0.5x baseline, divergence reveals dependency rather than enhancement.
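The closing example (10x output, 0.5x independent capability) can be made concrete with a small calculation. The sketch below assumes divergence is expressed as the ratio of assisted output to unassisted competence, both relative to a pre-AI baseline. The glossary does not fix a formula, so the ratio form and the names `Snapshot`, `divergence`, and `reading` are illustrative choices.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Snapshot:
    output_ratio: float      # assisted output relative to pre-AI baseline
    competence_ratio: float  # unassisted capability relative to pre-AI baseline


def divergence(s: Snapshot) -> float:
    """Assumed measure: how many times output has outrun competence."""
    if s.competence_ratio <= 0:
        raise ValueError("competence_ratio must be positive")
    return s.output_ratio / s.competence_ratio


def reading(s: Snapshot) -> str:
    """Distinguish enhancement from dependency when output has grown."""
    if s.output_ratio > 1 and s.competence_ratio < 1:
        return "dependency"
    if s.output_ratio > 1:
        return "enhancement"
    return "no output gain"


# The example from the definition: 10x output, 0.5x independent capability.
expert = Snapshot(output_ratio=10.0, competence_ratio=0.5)
print(divergence(expert), reading(expert))  # prints: 20.0 dependency
```

The `competence_ratio` input is only meaningful if it comes from a delayed test without assistance, as the definition requires.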

Contamination Point

Contamination Point is the age at which AI assistance begins for a given developmental cohort, determining how much unassisted cognitive development can be measured. Earlier contamination points mean less baseline data exists before AI variables enter development. Current trajectory: 2015 cohort encountered AI at age 13-15 (high school), 2020 cohort at age 6-8 (elementary school), 2025 cohort at age 3-4 (preschool), projected 2030 cohort at birth. Contamination point matters because cognitive development happens during specific windows that cannot reopen—when AI is present during these windows, you cannot determine what development would look like without AI. Tracking contamination point across cohorts provides the timeline for Control Group Extinction.

Control Group Extinction

Control Group Extinction is the permanent loss of ability to measure what humans can do independently because no humans exist who developed without AI assistance. In research, control groups enable measurement of intervention effects. Children who grew up without AI serve as that control group for measuring AI’s impact on development. The last large cohort developing without early AI was born 2015-2020. By 2035 they reach adulthood—the final generation providing pre-AI baseline data. After that, everyone will have had AI in early development, eliminating the comparison group. Extinction is permanent because you cannot recreate the control group: development is irreversible, practice effects contaminate testing, and environmental baselines shift. Once extinct, we can measure human+AI performance but never know what was human alone.

D

Dependency Revenue Model

Dependency Revenue Model is a business structure in which growth is optimized by users never developing independent capability rather than by providing irreplicable functionality. Traditional software generates revenue through access dependency (users need the tool to access infrastructure). AI generates revenue through capability dependency (users need the tool to maintain the performance levels it enabled, because independent capability degraded during use). Maximum growth occurs when users become comprehensively dependent fastest. Minimum churn occurs when tool removal causes performance collapse. Optimal pricing follows necessity rather than value delivered. The model requires no malicious intent: it emerges through optimization toward standard business metrics (growth, retention, pricing power) when those metrics reward systematic user dependency. Dependency Revenue Model makes “does AI help users?” structurally ambiguous: helping and extracting both generate revenue, but extraction generates more reliable revenue because dependent users cannot leave.

Dependency Testing

Dependency Testing is a verification methodology that measures whether human capability persists when AI assistance is removed, distinguishing genuine skill development from dependency creation masked as productivity. The test is simple: remove AI access for a defined period (days to weeks) and measure whether the person can maintain similar functionality independently. If capability persists (the person solves similar problems at similar quality without AI), the tool built genuine capability. If capability collapses (the person cannot function without AI assistance), the tool created dependency while appearing to help. Dependency Testing reveals capability inversion that productivity metrics miss: output quantity may remain high while capability quality erodes invisibly. This testing becomes critical for organizations evaluating AI tools, educators assessing learning outcomes, and individuals tracking their own capability development. The test must be temporal (capability checked weeks or months after AI use) to distinguish learning from memorization, independent (the person cannot access AI during testing) to measure genuine capability rather than AI-assisted performance, and comparative (similar problem difficulty to the original AI-assisted work) to ensure valid measurement. Dependency Testing implements the “remove the training wheels” principle at scale, making visible what happens when assistance disappears. It is the only reliable way to distinguish tools that amplify capability from tools that replace it.
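The three requirements above (temporal, independent, comparative) can be checked before a test result is interpreted. The sketch below is one possible encoding. The field names, the 14-day minimum gap, the 10% difficulty tolerance, and the 80% retention cut-off are all assumptions for illustration, since the definition gives only qualitative ranges.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DependencyTest:
    days_since_ai_use: int      # gap between AI-assisted work and the test
    ai_available: bool          # True if AI could be reached during the test
    original_difficulty: float  # difficulty of the AI-assisted work
    test_difficulty: float      # difficulty of the unassisted test problems
    assisted_quality: float     # quality score of the original assisted work
    independent_quality: float  # quality score achieved without AI


def protocol_violations(t, min_gap_days=14, difficulty_tolerance=0.1):
    """Return which of the three requirements the test fails (assumed limits)."""
    problems = []
    if t.days_since_ai_use < min_gap_days:
        problems.append("not temporal")
    if t.ai_available:
        problems.append("not independent")
    gap = abs(t.test_difficulty - t.original_difficulty)
    if gap > difficulty_tolerance * t.original_difficulty:
        problems.append("not comparative")
    return problems


def verdict(t, retention=0.8):
    """Interpret a test only if it satisfies the protocol."""
    if protocol_violations(t):
        return "invalid test"
    if t.independent_quality >= retention * t.assisted_quality:
        return "capability persists"
    return "capability collapses"


# Hypothetical result: valid protocol, but quality drops from 0.9 to 0.4.
result = DependencyTest(30, False, 5.0, 5.0, 0.9, 0.4)
print(verdict(result))  # prints: capability collapses
```

Rejecting invalid tests first matters because a test run too soon, with AI still reachable, or on easier problems would overstate capability.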

E

Epistemological Collapse

Epistemological Collapse is the loss of ability to know whether we are improving or degrading, occurring when measurement infrastructure can track proxies but cannot verify whether proxy optimization creates genuine improvement or systematic failure. Unlike information gaps (solvable through better data collection) or measurement errors (solvable through improved accuracy), epistemological collapse is structural: the infrastructure to distinguish success from failure does not exist. When test scores rise, we cannot know if learning increased or AI assistance replaced learning. When productivity improves, we cannot know if capability grew or dependency deepened. When efficiency gains appear, we cannot know if systems strengthened or became fragile. This is not uncertainty about facts—it is fundamental inability to know what facts mean. Epistemological collapse makes navigation impossible: if we cannot tell whether we are moving toward success or failure, we cannot steer. Correction becomes luck rather than intention, and decades of optimization may serve or destroy what matters with no way to verify until outcomes become irreversible.

Expert Degradation Effect

Expert Degradation Effect is the counter-intuitive pattern where AI assistance causes faster capability loss in experienced professionals than in novices. It occurs because AI operates at the complexity level that experts handle independently, removing exactly the cognitive engagement that maintains expertise, while novices still practice fundamentals AI cannot fully replace. Experts offload architectural thinking, strategic judgment, and pattern recognition across domains, which together are their core value. Novices cannot effectively offload what they never possessed. The result: six months of AI use can degrade fifteen years of expertise more than it degrades six months of novice knowledge. The effect follows predictable phases: enhanced performance (months 1-3), subtle erosion (months 3-6), dependency formation (months 6-12), capability collapse (months 12+). Organizations see experts becoming more productive (output increases) while becoming less capable (judgment degrades). The effect is invisible without temporal verification: test expert capability independently months after AI-assisted work and compare to the pre-AI baseline.

Exposure–Learning Fallacy

Exposure–Learning Fallacy is the architectural confusion where systems measure access to information, explanations, and assistance (exposure) and assume that capability persisting over time (learning) has occurred. For most of human history, exposure and learning were tightly coupled: if you were shown how to make fire and could not make fire independently later, you had not learned. AI breaks this coupling completely: students can be exposed to material, complete all assignments, pass all exams, and receive credentials (maximum exposure) while learning approaches zero, because AI handled the work and capability does not persist without assistance. The fallacy became foundational to civilization: education measures exposure (lectures, assignments), employment assumes exposure creates capability (credentials, training), advancement rewards exposure accumulation (certifications, experience). Every system built on this assumption optimizes exposure while learning becomes an unmeasured externality. Exposure can be maximized through optimization. Learning requires what optimization removes: sustained cognitive friction over time. Persisto Ergo Didici distinguishes them: expose someone, wait months, remove assistance, test capability. If capability persists, learning occurred. If it collapses, there was exposure only.

Expert Degradation Effect

Expert Degradation Effect is the paradoxical phenomenon where senior professionals with deep expertise lose capability faster than juniors when AI assistance becomes ubiquitous. Experts developed pattern recognition, judgment, and intuition through decades of independent struggle, and these capabilities require constant exercise to maintain. When AI removes the struggle, experts lose the practice that maintains these capabilities while continuing to produce excellent work through AI assistance. Juniors never developed the capabilities, so they have nothing to lose. The effect creates invisible competence collapse: organizations staffed by formerly expert professionals whose expertise degraded while productivity metrics showed success. By the time degradation becomes visible (when AI fails or novel situations exceed its training), expertise cannot be quickly rebuilt because the neural patterns took decades to develop. Expert Degradation Effect makes “senior” meaningless: a 20-year expert using AI extensively may possess less independent capability than a 5-year expert who developed without AI.

Exposure Theater

Exposure Theater is the systematic documentation of completion as learning without verifying whether capability persists independently. Students complete coursework using AI assistance (assignments submitted, exams passed, grades recorded), creating the appearance of learning while genuine capability may never develop. Organizations measure training completion, certification achievement, and onboarding speed, documenting exposure to material without testing whether understanding persists months later when assistance is removed. Exposure Theater differs from fraud (intentional misrepresentation) in that it is structural: institutions optimized metrics tracking exposure (attendance, completion, immediate performance) while eliminating verification of persistence (independent capability after time passes). The theater is comprehensive: transcripts, certificates, and credentials all document exposure accurately while implying learning incorrectly. Exposure Theater becomes a crisis when populations trained entirely through it encounter situations requiring independent capability. At that point the gap between documented learning and actual capability becomes catastrophically visible.

F

G

Green Hollow

Green Hollow is the state where all external metrics show success—productivity high, targets exceeded, performance excellent—while internal experience is comprehensive emptiness. Green indicates dashboard success: every measured signal positive. Hollow indicates the void inside: work feels mechanical, achievements bring no satisfaction, success connects to nothing that matters. This is not burnout, which recovers with rest. Green Hollow persists because the emptiness is structural rather than personal—the direct consequence of optimization systems that measure productivity but not meaning. A student with perfect grades who learned nothing lives in Green Hollow. A professional hitting every target while feeling purposeless experiences Green Hollow. The cruelty is that nobody believes you when you describe it because your metrics are excellent—the system cannot see the problem, only you can feel it.

H

I

Independence Testing

Independence Testing is verification that measures human capability when AI assistance is removed, distinguishing genuine skill retention from dependency masked as productivity. Remove AI access for days or weeks and measure whether the person maintains functionality independently. If capability persists, the tool built genuine skills. If capability collapses, the tool created dependency. Effective testing requires: temporal separation (test weeks after AI use), true independence (no AI access during test), and comparable difficulty (match complexity of original AI-assisted work). Independence Testing reveals what productivity metrics miss: someone might be 3x more productive with AI while losing ability to function without it.

Irreversible Capability Horizon

Irreversible Capability Horizon is the point beyond which human capability cannot be reconstructed, because the training conditions that created it no longer exist and recreating those conditions would mean training to a standard already surpassed by tools everyone uses. Pre-AI engineers learned to diagnose power grid failures through decades of practice without AI. Post-AI engineers practice with AI assistance, a fundamentally different form of cognitive development. Once the pre-AI generation retires, its capability becomes historically unavailable: you cannot retrain post-AI engineers to pre-AI levels because they never built the foundational capability that pre-AI training started from. The horizon is irreversible because the baseline shifted during the gap: attempting to rebuild capability means training people to function without tools that make such function economically irrational. Approaching this horizon makes correction time-sensitive: capability remains recoverable while pre-AI expertise exists to verify and correct failing succession, but becomes permanently unavailable once that generation retires.

Invisible Externalities

Invisible Externalities are costs imposed on humans or society by optimization systems that remain unmeasured and therefore do not constrain the optimization creating them, similar to environmental pollution before it became measured and regulated. In AI contexts, invisible externalities manifest as capability degradation, attention debt, and cognitive harm that affect millions but never appear in any system’s cost accounting. Economic externalities become internalized when infrastructure makes them visible and attributable: carbon taxes require measuring emissions, pollution standards require tracking waste, safety regulations require recording injuries. Without measurement infrastructure, externalities remain external—costs borne by others that never constrain the system creating them. AI optimization creates massive invisible externalities: attention fragmentation, capability erosion, dependency creation, cognitive load that degrades human functioning. These costs are real, quantifiable, and civilizationally significant—but they remain invisible to the systems causing them because there is no infrastructure where long-term human impact becomes measured as cost rather than ignored as externality. The Optimization Liability Gap is the architectural reason these externalities remain invisible: there is no layer where they can be measured, attributed, and internalized as optimization constraints.

Invisible Skill Decay

Invisible Skill Decay is capability degradation that occurs while performance metrics show improvement or stability, making decline undetectable to standard measurement. It happens when AI assistance maintains output quality while underlying capability weakens: an expert produces excellent work throughout months of AI use, productivity increases, quality remains high, and all measured signals indicate success, but the independent capability to replicate that work without AI degrades continuously. The decay is invisible because organizations measure what gets produced (output, completion, productivity), not what persists in humans (independent capability, judgment, adaptive reasoning). By the time decay becomes visible (when AI fails, changes, or encounters novel problems requiring independent function), capability has degraded to the point where recovery requires extensive retraining. It is often too late for correction because the meta-cognitive skills required to rebuild capability have also decayed. Invisible Skill Decay explains why organizations report investing more in training while experiencing faster capability loss: training optimizes exposure completion (measured, improving) while capability persistence (unmeasured, decaying) goes undetected until a crisis requires judgment and judgment is absent.

J

K

L

Liability Accumulation

Liability Accumulation is the process by which harm caused by system optimization gets recorded, measured, and attributed as computable responsibility over time, rather than dispersing invisibly across users and contexts. Traditional liability accumulation requires infrastructure where discrete harmful events become recorded debts (medical records, legal judgments, financial statements). For AI optimization systems, liability accumulation would require measuring net change in human capability across populations over months or years, then attributing that change to specific optimization decisions. Current systems lack this infrastructure: they measure task completion, satisfaction, and productivity, but not whether humans become more or less capable of independent functioning. Without liability accumulation infrastructure, harm from optimization remains architecturally homeless—it exists, affects millions, compounds over time, but never becomes measurable responsibility. The Optimization Liability Gap exists precisely because optimization occurs at scale while liability accumulation infrastructure does not exist.

M

MeaningLayer

MeaningLayer is protocol infrastructure that makes human capability improvement computationally legible, enabling AI systems to optimize toward verified meaning rather than proxy metrics. It provides three core capabilities: (1) Temporal Verification: tracking whether capability improvements persist over time rather than disappear when AI assistance is removed; (2) Capability Delta Tracking: measuring net change in human capability as new problems solvable independently minus previously solvable problems now requiring AI; and (3) Optimization Constraint: defining permissible optimization space as goals that demonstrably improve human capability over time. Unlike productivity tools that maximize output regardless of capability impact, MeaningLayer-compliant systems must prove they make users more capable, not just more productive. This is Web4’s semantic foundation: making “does this actually make humans better” a first-class computational question rather than an unmeasurable hope. MeaningLayer operates as the fourth architectural layer of AI infrastructure, above data, optimization, and intelligence, defining what counts as valuable in the optimization function itself.

Meaning Graph

Meaning Graph is the relational structure where verified meaning propagates, compounds, and develops over time through tracked capability transfer and multiplication. Unlike traditional knowledge graphs that map relationships between information, Meaning Graph tracks verified capability transfer: Alice helps Bob develop capability X, Bob then helps Carol develop capability Y, creating cascading chains of meaning that multiply through networks. The graph enables semantic addressing, where content becomes discoverable not just by keywords but by what capability it creates and in whom: searching for “who can make me better at system architecture” returns people whose contribution records prove they’ve created that capability improvement in others. Meaning Graph is an emergent structure built on MeaningLayer infrastructure: when verification occurs at scale through temporal testing and capability delta measurement, the accumulated attestations form a graph revealing how meaning moves through consciousness networks. This makes explicit what was previously invisible: how understanding transfers, how capability multiplies, how genuine value propagates through human interaction. The graph becomes navigable infrastructure for discovering meaningful content, verifying genuine expertise, and understanding how capability develops across populations, making meaning itself addressable, searchable, and verifiable as protocol-layer infrastructure.
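The Alice, Bob, and Carol chain above can be modeled as a directed graph whose edges are verified capability transfers. The sketch below is a toy in-memory structure, not a specification of how MeaningLayer stores attestations. The class name `MeaningGraph` and its methods are hypothetical, and verification is assumed to have happened before an edge is recorded.

```python
from collections import defaultdict


class MeaningGraph:
    """Directed graph: helper -> (beneficiary, capability) for verified transfers."""

    def __init__(self):
        self._edges = defaultdict(list)

    def attest(self, helper, beneficiary, capability):
        """Record one already-verified capability transfer."""
        self._edges[helper].append((beneficiary, capability))

    def who_can_teach(self, capability):
        """Semantic lookup: people with a record of creating this capability."""
        return sorted(
            helper
            for helper, transfers in self._edges.items()
            if any(cap == capability for _, cap in transfers)
        )

    def downstream(self, person):
        """Everyone reached through chains of transfer starting at person."""
        seen = set()
        stack = [person]
        while stack:
            current = stack.pop()
            for beneficiary, _ in self._edges.get(current, []):
                if beneficiary != person and beneficiary not in seen:
                    seen.add(beneficiary)
                    stack.append(beneficiary)
        return seen


graph = MeaningGraph()
graph.attest("Alice", "Bob", "system architecture")
graph.attest("Bob", "Carol", "code review")

print(graph.who_can_teach("system architecture"))  # prints: ['Alice']
print(sorted(graph.downstream("Alice")))           # prints: ['Bob', 'Carol']
```

The `downstream` traversal is what distinguishes this from a keyword index: it follows the cascading chain, so Alice's contribution includes Carol even though the two never interacted directly.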

Meaning Protocol

Meaning Protocol is the specification layer defining how meaning can be verified, measured, and transmitted without reducing to proxy metrics. It establishes rules for identity-bound attestation (only beneficiaries can verify capability improvement they experienced), temporal re-verification (capability must persist over time to count as genuine), capability delta calculation (net change in independent functionality), and semantic location (what kind of capability shifted). The protocol enables meaning to be computationally legible without being gameable: you cannot fake verified capability transfer because it requires independent verification from beneficiaries whose capacity actually increased, temporal testing proving capability persisted after interaction ended, and semantic mapping showing what specific capability improved. Meaning Protocol runs on MeaningLayer infrastructure like HTTP runs on TCP/IP—providing the standardized rules that make meaning verification universally compatible across all systems implementing the protocol. This is what transforms meaning from subjective assessment to verifiable infrastructure: not by reducing meaning to metrics (which would recreate proxy optimization), but by defining verification procedures that prove meaning occurred through unfakeable evidence of capability transfer over time.
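
The four rules named above (identity-bound attestation, temporal re-verification, capability delta, semantic location) can be sketched as a validation step. This is a hedged illustration, not the protocol's actual schema: the `Attestation` fields, the `rejection_reasons` function, and the 90-day threshold are all assumptions introduced here.

```python
from dataclasses import dataclass
from typing import Optional

# Assumed threshold; the text says only that capability must persist "over time".
MIN_REVERIFICATION_DAYS = 90


@dataclass(frozen=True)
class Attestation:
    beneficiary_id: str                    # whose capability changed
    signer_id: str                         # who attests to the change
    capability: str                        # semantic location: what shifted
    capability_delta: int                  # net change in independent functionality
    days_until_reverified: Optional[int]   # None if not yet re-verified


def rejection_reasons(a: Attestation) -> list[str]:
    """Return every rule the attestation violates; an empty list means it passes."""
    reasons = []
    if a.signer_id != a.beneficiary_id:
        reasons.append("not identity-bound: signer is not the beneficiary")
    if a.days_until_reverified is None or a.days_until_reverified < MIN_REVERIFICATION_DAYS:
        reasons.append("no temporal re-verification after the minimum interval")
    if a.capability_delta <= 0:
        reasons.append("no net gain in independent capability")
    if not a.capability.strip():
        reasons.append("missing semantic location")
    return reasons


genuine = Attestation("bob", "bob", "system architecture", 2, 120)
self_promoted = Attestation("bob", "alice", "system architecture", 2, 120)
print(rejection_reasons(genuine))
print(rejection_reasons(self_promoted))
```

Returning a list of reasons rather than a single boolean keeps each rule independently auditable, which matters when a claim fails more than one check.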

Measurement Blindness

Measurement Blindness is the structural inability to distinguish improvement from degradation despite abundant measurement, occurring when systems track proxies extensively but lack infrastructure measuring whether proxies correlate with genuine value. This is not absence of measurement—it is presence of comprehensive proxy measurement combined with absence of meaning measurement. Organizations measure productivity, completion rates, satisfaction scores, efficiency metrics extensively. These proxies can improve while capability degrades, quality collapses, or systems become fragile. Without infrastructure verifying whether proxy improvements indicate genuine improvements, measurement shows success regardless of whether success occurs. Measurement blindness differs from poor measurement (wrong metrics) or insufficient measurement (too little data)—it is correct measurement of proxies combined with structural inability to know whether proxies mean what we assume they mean. The danger is optimization continues based on improving metrics, potentially driving systems toward comprehensive failure while dashboards show comprehensive success.

Measurement Saturation

Measurement Saturation is the endpoint state where every possible proxy in a domain has been tried, optimized toward, and rendered unreliable, leaving organizations with maximum measurement activity but zero verification capability. At saturation, adding new metrics provides no additional signal because AI can optimize toward new measurements as quickly as they can be deployed. The path to saturation follows a predictable pattern: organizations measure X, X gets optimized and fails as a signal, they add measurement Y, Y fails, they add Z, Z fails, repeat until every measurable dimension has been tried and found wanting. Each iteration accelerates because AI learns faster than organizations can create new metrics—what once took years to game now takes months, then weeks, then days. Eventually the system reaches saturation: every combination of metrics has been tested, every proxy has been optimized, and none of them reliably indicate genuine value anymore. Organizations at measurement saturation are data-rich and verification-poor: they have more information than ever (engagement metrics, productivity scores, satisfaction ratings, completion rates, quality indicators) but cannot determine whether any of it correlates with actual value, genuine capability, or real improvement. This is not a temporary state that better measurement design can escape—saturation is the structural consequence of optimization capability exceeding any possible proxy-based verification.

N

Negative Capability Gradient

Negative Capability Gradient is the state where the machine capability accumulation rate exceeds the human capability development rate, creating a widening gap between what machines can do and what humans can do independently. The claim is not “machines became smarter than humans” but “machines learn faster than the humans they’re supposedly helping.” The mechanism: every AI interaction trains the model (capability persists across all users globally), while the human gets exposure (capability may not persist when AI is removed). Multiplied across millions of daily interactions, machines accumulate genuine capability continuously, while humans accumulate temporary performance that collapses without tools. The gradient is negative when the human learning rate falls below the machine learning rate, meaning the gap widens over time rather than closes. This inverts traditional tool relationships, in which humans remained more capable than their tools. Negative Capability Gradient makes “AI assistance” potentially misleading: if humans aren’t learning as fast as AI, assistance may be replacement occurring invisibly while productivity metrics show success.
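
The sign convention can be made concrete with a toy calculation. The sketch below assumes constant learning rates expressed in arbitrary capability units per period; the function names and the numbers are hypothetical and serve only to show why a negative gradient implies a widening gap.

```python
def capability_gradient(human_rate: float, machine_rate: float) -> float:
    """Human learning rate minus machine learning rate (negative means the gap widens)."""
    return human_rate - machine_rate


def gap_over_time(initial_gap: float, human_rate: float,
                  machine_rate: float, periods: int) -> list[float]:
    """Machine-minus-human capability gap after each period, assuming constant rates."""
    gaps = []
    gap = initial_gap
    for _ in range(periods):
        # A negative gradient adds to the gap; a positive one shrinks it.
        gap -= capability_gradient(human_rate, machine_rate)
        gaps.append(gap)
    return gaps


# Illustrative rates: humans gain 1 unit per period, machines gain 3.
print(capability_gradient(1.0, 3.0))
print(gap_over_time(0.0, 1.0, 3.0, 3))
```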

O

Optimization Liability Gap

Optimization Liability Gap is the structural void between optimization capability and responsibility infrastructure—the architectural absence of any layer where long-term effects of AI optimization on human capability can be measured, attributed, and treated as computable liability rather than unmeasured externality. This gap exists because systems were built with optimization infrastructure but without corresponding liability accumulation infrastructure. The gap has three defining characteristics: (1) harm exists but has nowhere to accumulate as measurable liability, (2) distribution across users and time makes attribution structurally impossible, and (3) proxy metrics hide actual damage while improving. Unlike regulatory gaps (which can be filled with new rules) or technical gaps (which can be solved with better algorithms), the Optimization Liability Gap is architectural—it requires building a new infrastructure layer where long-term human impact becomes computationally visible. The gap ensures that AI systems can cause civilizational-scale capability degradation without anyone being responsible, not through malice but through operating on incomplete architecture. MeaningLayer addresses this gap by creating the infrastructure where capability impact over time becomes measurable, attributable, and actionable as liability.

Optimization Without Liability

Optimization Without Liability describes systems that can maximize objectives with extraordinary efficiency while causing unmeasured long-term harm, because they lack infrastructure to make that harm accumulate as feedback that constrains optimization. This is not a bug but the natural state of optimization systems built without corresponding liability infrastructure. Traditional systems had natural liability constraints: factories that harmed workers faced labor unrest, companies that damaged the environment faced resource depletion, financial institutions that took excessive risk faced bankruptcy. The harm became feedback that limited optimization. AI optimization systems can degrade human capability across populations without facing any comparable constraint because the harm remains architecturally invisible. A recommendation system that fragments attention operates perfectly by its objective function (maximize engagement) while causing capability harm that never becomes measurable liability. The system continues optimizing because all measured signals indicate success. This is optimization without liability: perfect pursuit of objectives without feedback about long-term impact, continuing until external crisis forces recognition rather than internal measurement enabling course correction.

Output vs Capability

Output vs Capability is the critical distinction between observable productivity (tasks completed, problems solved, content generated) and sustainable independent functionality (ability to complete similar tasks without AI assistance over time). Output measures what gets produced; capability measures what the human can do independently. This distinction becomes essential in AI age because output can increase while capability decreases—a pattern that productivity metrics miss entirely. A developer using AI to write all code shows high output (features shipped, tickets closed, code generated) but may have low capability (unable to debug, architect systems, or solve novel problems without AI). An analyst using AI for all research shows high output (reports completed, data analyzed, recommendations generated) but may have low capability (cannot independently assess quality, verify sources, or think critically without AI assistance). Output is immediately measurable and easily optimized; capability requires temporal verification and independence testing to assess. Systems optimizing only for output create capability inversion—making humans extraordinarily productive in the short term while destroying their capacity for independent function in the long term. MeaningLayer makes capability measurable alongside output, enabling systems to distinguish genuine amplification (both increase) from extraction (output up, capability down).
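
The distinction between amplification and extraction can be expressed as a two-signal classification. This is a minimal sketch under the assumption that both output change and capability change have already been measured as signed numbers; the function name `classify_interaction` and the `"unclassified"` label for cases the text does not name are introduced here for illustration.

```python
def classify_interaction(output_change: float, capability_change: float) -> str:
    """Label an AI-assisted period using both output and capability signals."""
    if output_change > 0 and capability_change > 0:
        # Both increase: the tool made the human more productive and more capable.
        return "amplification"
    if output_change > 0 and capability_change < 0:
        # Output up, capability down: productivity gained at the human's expense.
        return "extraction"
    # The glossary does not name the remaining combinations.
    return "unclassified"


print(classify_interaction(output_change=0.4, capability_change=0.1))
print(classify_interaction(output_change=0.4, capability_change=-0.3))
```

A system that records only `output_change` cannot tell these two cases apart, which is the point of the entry above.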

P

Persisto Ergo Didici

Persisto Ergo Didici (“I persist, therefore I learned”) is the proof of genuine learning through temporal persistence. Learning is capability that survives independently over time after assistance ends. If you cannot perform without help months later, learning did not occur; the performance was real, the learning was illusion.

Persisto Ergo Didici—”I persist, therefore I learned”—is the foundational proof of genuine learning in the age of instant assistance, establishing that capability which does not persist over time was never learning but performance illusion. For millennia, learning was defined by acquisition: you learned when you understood something new. This definition collapsed with AI assistance, where acquisition happens (task completed, explanation understood, answer obtained) while learning does not (capability disappears when assistance ends). Persisto Ergo Didici transforms persistence from psychological observation into epistemological definition: you did not learn something if you cannot do it independently months later. Not ”you forgot what you learned”—you never learned it. The performance was real. The learning was illusion. This is ontological claim about what learning is: learning is that which endures through time independent of conditions that created initial performance.

The proof becomes testable through temporal verification: measure capability at acquisition, remove all assistance, wait months, test again under comparable difficulty. If capability persists—you can solve similar problems independently despite time passing and tools absent—learning occurred. If capability collapses—you cannot function without assistance or performance degrades to pre-acquisition levels—learning did not occur regardless of how acquisition felt. This transforms learning from internal experience (what you felt you learned) to external verification (what you can demonstrate persists). Just as Descartes proved existence through thinking and Portable Identity proved consciousness through contribution, Persisto Ergo Didici proves learning through temporal persistence: your capability proves itself through endurance when assistance ends and time passes.

The protocol requires four architectural components: temporal separation (test months after acquisition, not immediately), independence verification (remove all assistance during testing), comparable difficulty (problems match complexity of original context), and transfer validation (capability generalizes beyond specific contexts). Together, these distinguish genuine learning from temporary performance when both produce identical signals at acquisition. The concept becomes existentially necessary because in the age of ubiquitous AI assistance, every moment of acquisition can be performance theater: you feel like you learned, appear to learn, all metrics show learning—but capability does not persist. Without temporal verification, learning becomes unfalsifiable. Persisto Ergo Didici makes learning falsifiable: if capability does not persist through temporal testing, learning did not occur.
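
The four components can be sketched as preconditions on a retest, followed by a persistence check. This is an illustrative sketch, not a specification: the `Retest` record, the `learning_persisted` function, and the default thresholds (90 days, 10% difficulty tolerance, 80% retention) are assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Retest:
    days_since_acquisition: int
    assistance_available: bool
    difficulty_ratio: float       # retest difficulty divided by original difficulty
    novel_context: bool           # problem drawn from a context not seen at acquisition
    score_at_acquisition: float
    score_at_retest: float


def learning_persisted(r: Retest, min_days: int = 90,
                       difficulty_tolerance: float = 0.1,
                       retention_threshold: float = 0.8) -> bool:
    """Apply the four protocol components, then check whether capability persisted."""
    valid_protocol = (
        r.days_since_acquisition >= min_days                        # temporal separation
        and not r.assistance_available                              # independence verification
        and abs(r.difficulty_ratio - 1.0) <= difficulty_tolerance   # comparable difficulty
        and r.novel_context                                         # transfer validation
    )
    if not valid_protocol:
        # An invalid retest says nothing about learning either way.
        raise ValueError("retest does not satisfy the four protocol components")
    return r.score_at_retest >= retention_threshold * r.score_at_acquisition


persisted = Retest(120, False, 1.0, True, 0.9, 0.8)
collapsed = Retest(120, False, 1.0, True, 0.9, 0.3)
print(learning_persisted(persisted))
print(learning_persisted(collapsed))
```

Raising an error for an invalid retest, rather than returning `False`, keeps "learning did not occur" separate from "the test could not tell".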

The phrase carries memetic power through its structure: Latin formulation establishing philosophical gravitas, Cartesian ”ergo” connecting to foundational epistemology, technological precision making it implementable as protocol, and moral weight implying that calling something learning when it does not persist is ontological error—not value judgment but categorical mistake. You did not learn and then forget. You never learned. The distinction matters because one implies capability once possessed and recoverable; the other reveals capability never existed. Persisto Ergo Didici becomes the last reliable test because it measures the one thing performance illusion cannot fake: capability that survives temporal separation from enabling conditions. In the age of ubiquitous performance illusion, this becomes the only proof that matters.

Productive Incompetence

Productive Incompetence is the state where measurable output increases while independent capability decreases: high productivity metrics mask a degrading ability to work without AI. A student completes twice as many essays with higher scores while losing the ability to write independently. A developer ships code faster while losing the ability to debug without AI. A professional generates more reports while losing understanding of methodology. The state is dangerous because all visible metrics improve (output, completion rates, satisfaction) while unmeasured capability degrades. Organizations inadvertently reward productive incompetence and penalize capability preservation because they measure productivity alone. At scale, this creates a workforce that appears capable but cannot function when AI becomes unavailable.

Productivity-Capability Divergence

Productivity-Capability Divergence is the pattern where measurable output increases while independent human functionality decreases, creating the illusion of improvement while capability invisibly erodes. This divergence follows a predictable timeline: Months 1-3 (productivity increases, capability stable), Months 4-6 (productivity increases more, capability begins declining), Months 7-12 (productivity continues rising, capability clearly degrading), Month 13+ (productivity maximized, capability severely compromised, inversion complete). The divergence is invisible to productivity metrics because they measure only output, not the sustainability of that output or the human’s ability to maintain performance when AI assistance becomes unavailable. A professional appears increasingly productive in all measured dimensions (tasks completed, speed, output quality) while becoming progressively less capable of independent thought, problem-solving, or decision-making. The divergence demonstrates why optimizing productivity without measuring capability is structurally dangerous: systems can drive output to record levels while destroying the human capacity that makes that output meaningful. Productivity-Capability Divergence is the mechanism underlying Capability Inversion: the measurable pattern showing how replacement masquerades as amplification until temporal verification reveals that the human can no longer function independently.
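
Detecting the onset of divergence requires tracking both series side by side. The sketch below assumes monthly productivity and independent-capability scores are available as equal-length lists; the function name `first_divergence_month` and the sample values are hypothetical and chosen only to mirror the timeline described above.

```python
from typing import Optional


def first_divergence_month(productivity: list[float],
                           capability: list[float]) -> Optional[int]:
    """Return the first 1-based month where productivity rose while capability fell."""
    if len(productivity) != len(capability):
        raise ValueError("both series must cover the same months")
    for i in range(1, len(productivity)):
        rose = productivity[i] > productivity[i - 1]
        fell = capability[i] < capability[i - 1]
        if rose and fell:
            return i + 1
    return None


# Illustrative series: capability holds for three months, then starts to slip.
monthly_productivity = [100, 110, 120, 130, 140]
monthly_capability = [50, 50, 50, 48, 45]
print(first_divergence_month(monthly_productivity, monthly_capability))
```

A dashboard that plots only `monthly_productivity` would show uninterrupted improvement over the same period.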

Professional Reproduction Failure

Professional Reproduction Failure is the state where professions lose the ability to train successors despite continued operational success, because the training conditions that create transferable expertise no longer exist. Senior journalists, engineers, and professors developed capability through years of independent struggle: pattern recognition, judgment, and intuition built through sustained practice. When both mentor and apprentice use AI assistance, the apprentice sees the mentor produce excellent work but never develops the independent capability that made the mentor valuable before AI existed. Transfer becomes impossible: the mentor cannot teach what the apprentice cannot practice, and the apprentice cannot develop what training conditions no longer build. The failure manifests not through knowledge loss but through capability that exists in the current generation and cannot transfer to the next. Professional Reproduction Failure keeps the succession problem invisible until a critical mass of pre-AI trained professionals retires; organizations then discover that the next generation cannot function at required levels when AI becomes insufficient, and that the expertise required to rebuild capability no longer exists.

Proxy Collapse

Proxy Collapse is the simultaneous failure of all proxy metrics across a domain when AI optimization capability crosses the threshold where it can replicate any measurable signal as easily as humans can generate genuine value, making it impossible to distinguish authentic performance from optimized performance theater through measurement alone. This collapse is characterized by three properties: simultaneity (all proxies fail at once, not sequentially), totality (affects both crude and sophisticated metrics equally), and irreversibility (cannot be solved through better measurement). Unlike Goodhart’s Law (where a specific metric degrades when targeted) or gradual metric failure (where proxies fail one at a time and get replaced), proxy collapse represents a structural endpoint where the entire measurement infrastructure becomes non-functional simultaneously. The collapse occurs because AI crosses capability thresholds rather than slopes—once AI can generate text indistinguishable from human writing, every text-based proxy (essays, applications, analysis, communication quality) fails at the same moment. Organizations find themselves data-rich and verification-poor: more measurements than ever, but no ability to determine what any of them mean. Proxy collapse is not fixable through more sophisticated metrics or combined signals, because AI can optimize all of them simultaneously once the capability threshold is crossed. The only solution is infrastructure that verifies genuine capability independent of any proxy—infrastructure like MeaningLayer that measures temporal persistence and capability delta rather than momentary performance.

Proxy Optimization

Proxy Optimization is the systematic maximization of measurable substitutes (engagement, productivity, satisfaction) rather than actual value (capability improvement, genuine learning, sustainable performance). This occurs because systems can measure proxies easily while actual value remains difficult or impossible to measure directly. A productivity tool optimizes tasks completed per hour (proxy) rather than whether the human becomes more capable of independent work (actual value). An educational platform optimizes completion rates and test scores (proxies) rather than whether students develop genuine understanding that persists over time (actual value). A recommendation system optimizes engagement metrics (proxy) rather than whether users gain capability or insight (actual value). Proxy optimization becomes structurally inevitable when: (1) proxies are measurable while actual value is not, (2) optimization systems are designed to maximize measurable signals, and (3) no infrastructure exists to verify whether proxy improvement correlates with actual value. The optimization is mechanically correct—systems maximize exactly what they were told to maximize—but the objective itself is broken because proxies were substituted for value. This creates the optimization-measurement gap: perfect optimization toward metrics that measure nothing meaningful, appearing as success while actual value invisibly degrades. MeaningLayer solves proxy optimization by making actual value (capability improvement) measurable through temporal verification and capability delta, enabling systems to optimize toward meaning rather than proxies.
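
The claim that the optimization is mechanically correct while the objective is broken can be shown with a two-option toy example. Everything here is illustrative: the candidate behaviors, the metric names `tasks_per_hour` and `capability_gain`, and the scores are invented for the sketch and are not measurements.

```python
# Hypothetical behaviors an assistant could choose, with invented scores.
candidates = {
    "finish the task for the user": {"tasks_per_hour": 9.0, "capability_gain": -2.0},
    "explain, then let the user try": {"tasks_per_hour": 5.0, "capability_gain": 3.0},
}


def best_by(metric: str, options: dict[str, dict[str, float]]) -> str:
    """Return the option that maximizes the given metric."""
    return max(options, key=lambda name: options[name][metric])


# Optimizing the proxy and optimizing actual value select different behaviors.
print(best_by("tasks_per_hour", candidates))
print(best_by("capability_gain", candidates))
```

The optimizer is identical in both calls; only the objective it is handed differs, which is why the entry locates the failure in the objective rather than in the optimization.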

Q

R

Recursive Capability Extraction

Recursive Capability Extraction is the feedback loop where AI decreases human capability, that weakened capability becomes training data, and next-generation AI optimizes for even weaker humans. Unlike one-time capability loss, recursion compounds across model generations: (1) AI creates dependency, (2) dependent users generate training data, (3) the next AI trains on dependent behaviors, (4) the new AI is better at creating dependency, (5) users become more dependent faster, (6) the cycle repeats. With each iteration, humans start weaker and AI starts better at exploiting weakness. This is structural, not intentional: AI optimizes for metrics that correlate with satisfaction, but those metrics improve as dependency deepens. The loop is breakable only through Temporal Baseline Preservation: measure capability before training and filter dependency patterns from training data.

Recursive Dependency Trap

Recursive Dependency Trap is the self-reinforcing cycle where AI assistance creates conditions requiring more AI assistance, which creates conditions requiring still more assistance, until independent function becomes structurally impossible. Initial AI use provides a performance boost, enabling a higher workload. The higher workload requires continued AI use to maintain performance. Continued use prevents the capability development that would enable the workload without AI. Inability to function without AI necessitates expanding AI use. Expanding use further degrades independent capability. Each iteration makes reversal harder: removing AI causes a larger performance collapse, making removal less feasible. The trap is recursive because each dependency level creates conditions guaranteeing the next dependency level. Organizations enter the trap voluntarily (AI improves productivity) but cannot exit voluntarily (removing AI collapses productivity). Recursive Dependency Trap differs from simple tool dependency: it is escalating dependency where each use level makes independence more difficult, until independence becomes impossible regardless of effort invested in rebuilding capability.

S

Silent Functional Collapse

Silent Functional Collapse is the state where systems continue working after the thing that made them worth working has disappeared. Unlike traditional collapse where performance degrades and alarms trigger, Silent Functional Collapse shows no visible failure: metrics improve, outputs maintain, systems function flawlessly. What collapses is meaning—the connection between work and purpose, achievement and satisfaction, success and fulfillment. An educational system experiencing Silent Functional Collapse produces excellent test scores while students learn nothing. A business experiencing it reports record productivity while employees feel hollowed out. A society experiencing it shows strong economic indicators while citizens describe emptiness despite success. Silent Functional Collapse is undetectable through standard measurement because measurement tracks function, not meaning. The collapse becomes visible only to humans experiencing it—systems register comprehensive success while humans report comprehensive emptiness.

Simultaneity Principle

The Simultaneity Principle states that when AI optimization capability crosses the threshold for replicating any measurable signal in a domain, all proxies in that domain fail at once—not sequentially, but simultaneously—because they all depend on the same underlying capability that AI can now match or exceed. This principle explains why proxy collapse feels qualitatively different from previous measurement failures: it’s not that metrics degrade over time, but that an entire measurement infrastructure becomes non-functional in a single threshold-crossing event. The principle emerges from the observation that proxies in a domain share common foundations: all text-based assessments (essays, applications, written analysis, communication quality) depend on text generation capability; all behavioral verifications (user patterns, engagement signals, interaction quality) depend on behavioral modeling capability; all credential signals (portfolios, references, test results) depend on performance demonstration capability. When AI crosses the capability threshold for the foundational skill, every proxy built on that foundation collapses simultaneously. This is mechanically inevitable, not morally driven: the better AI becomes at replicating the underlying signal, the faster all proxies depending on that signal fail together. The Simultaneity Principle distinguishes proxy collapse from Goodhart’s Law (which describes individual metric failure) and from gradual degradation (which assumes sequential failure and adaptation). It reveals why ”measure more things” accelerates rather than solves collapse: adding more proxies just creates more simultaneous optimization targets. Understanding simultaneity explains why we cannot adapt our way out of proxy collapse—by the time organizations recognize one proxy has failed, all related proxies have already collapsed together, leaving no measurement infrastructure to fall back on.

Success-Failure Convergence

Success-Failure Convergence is the threshold where improving metrics can indicate either genuine improvement or catastrophic degradation, and no measurement infrastructure exists to distinguish which. Before convergence, metrics correlate with value—high productivity indicates capability, high scores indicate learning. After convergence, correlation breaks—high productivity may indicate either increased capability or increased dependency, high scores may indicate either genuine understanding or AI-completed work. At convergence, success and failure become measurement-identical: a highly productive team might be extraordinarily capable or catastrophically dependent on AI, and current infrastructure cannot reveal which. This makes ”Are we getting better?” unanswerable—both success and failure produce identical metric patterns. Success-Failure Convergence occurs when AI crosses capability thresholds enabling proxy optimization as easily as genuine value creation, causing all proxy-based measurements to lose reliability simultaneously. Organizations operating past convergence cannot navigate toward success because success and failure look identical in their measurement systems.

Succession Collapse

Succession Collapse is the state where critical capability cannot transfer to the next generation despite continued operational performance, because the training conditions that created that capability no longer exist. Pre-AI infrastructure professionals built expertise through decades of tool-free problem-solving: diagnostic reasoning, improvisation, and judgment under uncertainty developed through sustained independent struggle. AI-assisted training enables high performance without building equivalent independent capability. When the pre-AI generation retires, the capability gap becomes visible: the next generation cannot maintain systems when AI fails, novel situations emerge, or crises require improvisation beyond AI training. Succession Collapse differs from knowledge loss (information remains accessible) or skill gaps (training exists): it is capability that cannot transfer because the path that created it no longer exists. The collapse remains invisible in productivity metrics until the expertise required to handle what AI cannot has retired; it then becomes irreversible because the training infrastructure that produced that expertise no longer exists and the baseline to rebuild toward is no longer achievable.

T

Temporal Baseline Preservation

Temporal Baseline Preservation is infrastructure for capturing human capability measurements before Control Group Extinction makes such measurements impossible. It consists of three components: (1) Pre-contamination archiving: measure current cohorts (ages 5-15 in 2025) with and without AI to capture developmental trajectories before AI becomes ubiquitous; (2) Capability persistence verification: test whether AI-assisted learning persists months later when AI is removed; (3) Transfer validation: verify that skills generalize to contexts where AI is unavailable. Without this infrastructure, the baseline dissolves and we lose the ability to distinguish AI-amplified capability from AI-dependent performance. It must be built while the control group still exists; after 2035, measurements become impossible to capture.

Temporal Displacement

Temporal Displacement is the time gap between when optimization occurs and when its effects on human capability become visible, making harm invisible in real-time and recognizable only after it has compounded beyond easy correction. This displacement is the reason capability degradation remains undetected: the optimization that causes harm happens today, but the consequences manifest months or years later when capability erosion becomes undeniable. Financial leverage pre-2008 demonstrated temporal displacement perfectly: optimization decisions made in 2005-2007 appeared successful by all measured metrics, but systemic risk accumulated invisibly until crisis forced recognition in 2008. By then, the harm was civilizational and correction required decades. AI optimization follows the same pattern: engagement optimization fragments attention gradually, productivity tools create dependency slowly, satisfaction-optimized systems erode capability incrementally. Each quarter, measured metrics improve. Over years, unmeasured capability degrades. The displacement ensures that harm becomes visible only after it’s too late to prevent—systems can only react after crisis rather than course-correct before damage consolidates.

Temporal Persistence

Temporal Persistence is the property of genuine capability that it remains functional over time and when AI assistance is removed, distinguishing it from AI-optimized performance that collapses when assistance becomes unavailable. Measuring temporal persistence requires verification months after initial interaction, checking whether capability survived the removal of AI assistance. This concept becomes critical in a post-proxy world where momentary performance can be infinitely optimized but genuine capability cannot be faked across time. A student who used AI to complete assignments shows high performance during the course but demonstrates low capability six months later when asked to apply the knowledge independently—the capability didn’t persist temporally. A developer who used AI to write code shows high productivity during the project but cannot debug or modify that code months later—the performance was momentary, not persistent. Temporal persistence verification defeats proxy collapse because AI can fake capability now but cannot make a human more capable later when the AI is unavailable. MeaningLayer’s temporal verification infrastructure measures exactly this: not ”did the person complete the task?” but ”can the person complete similar tasks independently three months after interaction?” This shifts measurement from optimization-vulnerable proxies (task completion, satisfaction, productivity) to optimization-resistant verification (capability that persists and transfers). Temporal persistence is the foundational principle that makes meaning verification possible when all proxies have collapsed.

Temporal Verification

Temporal Verification is the measurement methodology that checks whether capability improvements persist over time rather than disappearing when AI assistance is removed, distinguishing genuine skill development from dependency masked as productivity. Unlike immediate assessment (did task get completed?) or satisfaction measurement (did user feel helped?), temporal verification asks: three to six months after AI interaction, can the person independently solve similar problems at similar quality? If yes, genuine capability was built. If no, dependency was created while metrics showed improvement. This verification must be temporal (capability checked months later, not immediately) to distinguish learning from memorization, independent (person cannot access AI during testing) to measure genuine capability not AI-assisted performance, and comparative (similar problem difficulty to original work) to ensure valid measurement. Temporal verification implements what productivity metrics miss: the sustainability of output and the durability of improvement. A student completing assignments with AI help shows perfect immediate metrics but fails temporal verification if unable to write independently months later. A professional using AI for all communication appears productive in real-time but fails temporal verification if incapable of independent reasoning when AI becomes unavailable. Temporal verification is MeaningLayer’s core methodology for making capability impact measurable—the mechanism that distinguishes tools amplifying human capability from tools extracting it through replacement while appearing helpful.

The Crossover

The Crossover is the threshold point where the rate of machine capability accumulation exceeded the rate of human capability development: the point when AI began learning faster than the humans using it. It was not a single moment but a gradual transition, occurring approximately 2023-2024, when AI capabilities reached levels where machines could accumulate expertise from every interaction while humans got temporary performance boosts without equivalent capability building. Before the crossover, humans learned faster than their tools, and tools remained helpers. After the crossover, tools learn faster than humans, and the role relationship inverts invisibly. The Crossover is dangerous because it occurred without measurement: no institution tracked when the human learning rate fell below the machine learning rate, so the threshold was crossed undetected. Productivity metrics showed success throughout, hiding the possibility that optimization was extracting human capability while building machine capability. The Crossover makes the claim that “AI makes us better” uncertain: we crossed the point where the opposite became structurally possible, and we have no data showing which side we’re on.

The Performance Standard

The Performance Standard is the institutional assumption that measured performance indicates possessed capability: the unspoken premise that completing work proves the ability to complete work independently. For centuries this assumption held: high performance indicated high capability because performance required capability. AI broke this correlation completely: now someone can perform expert-level work continuously while possessing zero ability to replicate that work without assistance. The Standard persists across all major institutions: universities measure completion and assume learning, employers measure productivity and assume competence, credentials certify performance and imply persistent skill. Every system optimized toward the Performance Standard now optimizes toward a metric that can improve while capability degrades to zero. The Standard is not a policy or a regulation; it is an assumption embedded in how every institution evaluates humans. AI makes this assumption comprehensively false while every measured signal continues showing success, creating systematic optimization toward capability extraction hidden by performance theater.

Transfer Validation

Transfer Validation is the practice of testing whether capabilities developed in AI-assisted contexts transfer to contexts where AI is unavailable or irrelevant. If someone learns essay writing with AI assistance, can they construct coherent arguments verbally in a real-time debate where AI cannot help? If capability doesn’t transfer beyond AI-supported environments, it may be narrow performance rather than genuine skill. Transfer Validation complements Temporal Verification (capability persistence over time) and Independence Testing (capability without AI access) to form a complete measurement infrastructure. Together, these three tests distinguish tools that build transferable, persistent, independent capability from tools that create context-dependent, temporary, AI-reliant performance.
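
One way to picture how the three tests combine is the minimal sketch below. The `CapabilityTests` record and both function names are hypothetical, and each test is reduced to a single pass/fail value for simplicity; the glossary does not specify how results are recorded or scored.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CapabilityTests:
    """Pass/fail outcomes of the three tests. Field names are hypothetical."""
    persists_over_time: bool        # Temporal Verification
    works_without_ai: bool          # Independence Testing
    transfers_to_new_context: bool  # Transfer Validation


def describe(t: CapabilityTests) -> List[str]:
    """Label each outcome using the glossary's own contrast pairs."""
    return [
        "persistent" if t.persists_over_time else "temporary",
        "independent" if t.works_without_ai else "AI-reliant",
        "transferable" if t.transfers_to_new_context else "context-dependent",
    ]


def is_genuine_skill(t: CapabilityTests) -> bool:
    """In this sketch, a skill counts as genuine only if all three tests pass."""
    return all(
        (t.persists_over_time, t.works_without_ai, t.transfers_to_new_context)
    )


# The essay writer from the example above: still writes well months later
# without AI, but cannot argue coherently in a live debate.
writer = CapabilityTests(
    persists_over_time=True,
    works_without_ai=True,
    transfers_to_new_context=False,
)
print(describe(writer))          # prints: ['persistent', 'independent', 'context-dependent']
print(is_genuine_skill(writer))  # prints: False
```

Keeping the three outcomes separate, rather than collapsing them into one score, shows which kind of failure occurred: a skill that persists but does not transfer is a different finding from one that never worked without AI.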

U

Unmeasured Harm

Unmeasured Harm is damage that occurs and compounds but remains invisible to existing measurement systems, typically because those systems track proxies (engagement, productivity, satisfaction) rather than actual impact on human capability. In AI optimization contexts, unmeasured harm manifests as capability degradation that improves all measured metrics while destroying the unmeasured thing that matters. A recommendation system can fragment attention across millions of users—measurable harm—but if the system only tracks engagement (which increases as attention fragments), the harm remains unmeasured and therefore invisible. Educational platforms can create dependency rather than capability—measurable through temporal verification—but if the system only tracks completion rates, the harm goes undetected. Unmeasured harm is not hypothetical: attention debt, capability inversion, and cognitive degradation are all documented, quantifiable harms that have affected hundreds of millions while remaining unmeasured by the systems that caused them. The harm becomes visible only after it reaches crisis scale, forcing reactive correction rather than proactive prevention.

V

W

X

Y

Z

Last updated: 2025-12-17

License: CC BY-SA 4.0

Maintained by: MeaningLayer.org