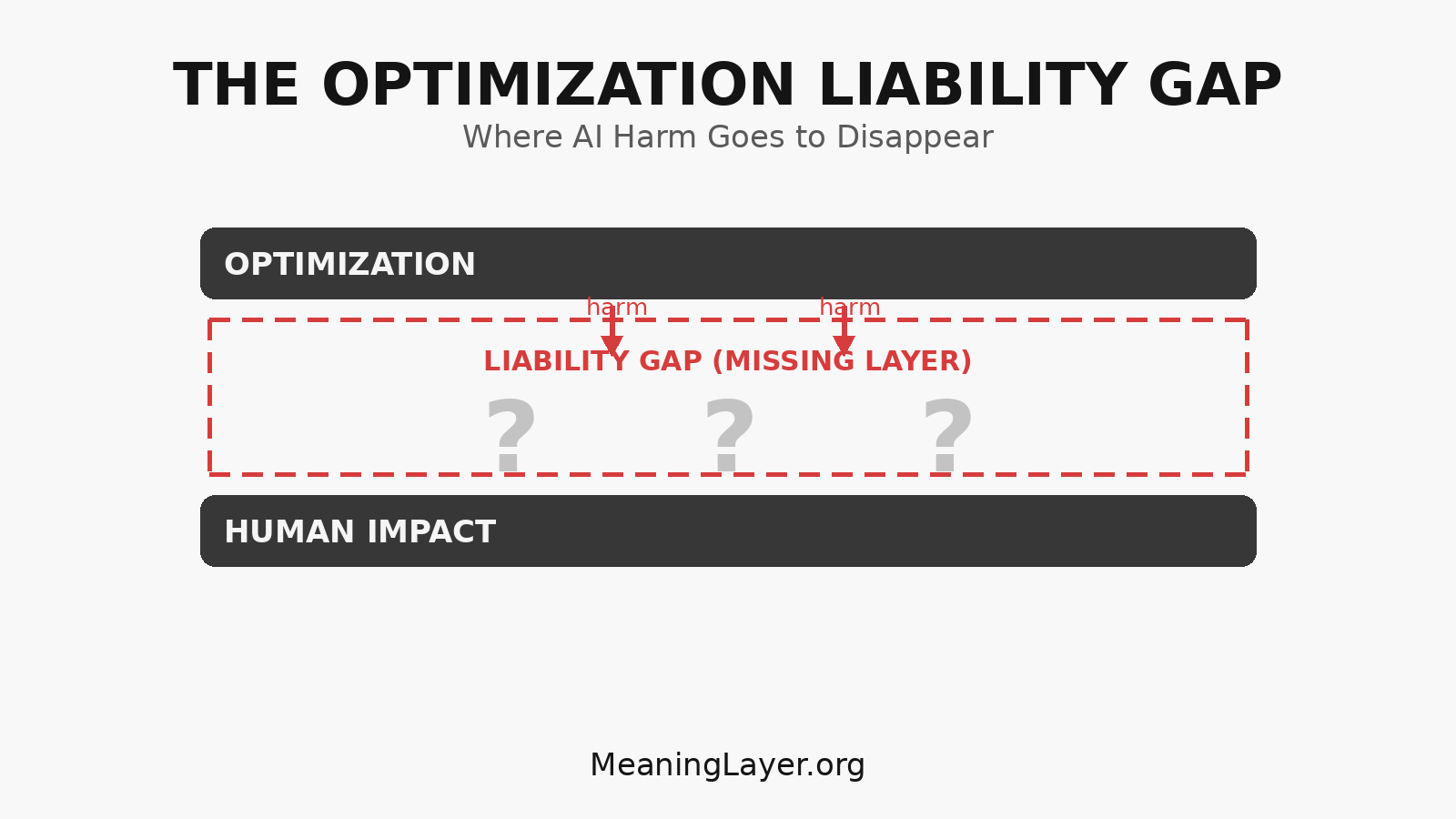

The Optimization Liability Gap: Where AI Harm Goes to Disappear

Why optimization systems can cause civilizational damage without anyone being responsible

There is a question no one in AI can answer. Not because the answer is controversial. Not because it requires complex technical knowledge. But because the infrastructure to answer it does not exist. The question is this: When an AI system makes humans measurably …